NVM Express over PCIe Gen4 Baby!

- Written by: Stephen Bates, CTO

I mentioned in my last blog that NVM Express (NVMe) is fast. Today it got even faster: we are demonstrating what we believe is the first public demo of NVMe running over PCIe Gen4. This doubles the per-lane throughput available to NVMe and allows systems built around NVMe to reach performance levels that were unobtainable before now.

PCIe Gen4 allows for more performance, fewer PCIe lanes for a given performance, or a combination of both. Many systems today suffer from IO bottlenecks or a shortage of PCIe lanes, and PCIe Gen4 can help address both issues.

NVMe and PCIe

For a long time NVMe was a protocol that was only standardized for PCIe. Although we can now transport NVMe over other physical layers, it is fair to say that PCIe remains the only option for direct connections between the CPU and NVMe devices. PCIe is also ubiquitous in servers: IDC predicts that about 14 million servers will ship in 2018, and I am sure all 14 million of them will have PCIe capability.

In 2017 the PCIe standards body (PCI-SIG) finalized the specification for PCIe Gen4, which doubled the throughput of PCIe from ~1GB/s/lane to ~2GB/s/lane. However, to take advantage of this improvement, both ends of the PCIe link must support the new speed. While several companies, like Xilinx and Mellanox, have been promoting PCIe Gen4-capable endpoints for a while now, it is only recently that any CPUs supporting PCIe Gen4 have become available.
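The ~1GB/s and ~2GB/s per-lane figures follow from the per-lane transfer rates (8GT/s for Gen3, 16GT/s for Gen4) and the 128b/130b line encoding that both generations use. Here is a minimal back-of-the-envelope sketch; it deliberately ignores packet and flow-control overhead, so it gives raw upper bounds rather than achievable throughput.

```python
"""Back-of-the-envelope PCIe link bandwidth (raw, one direction).

Assumes 128b/130b line encoding, which PCIe Gen3 and Gen4 both use.
Ignores TLP/DLLP protocol overhead, so real-world throughput is lower.
"""

# Per-lane transfer rate in gigatransfers per second (GT/s).
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0}

# 128b/130b encoding: 128 payload bits for every 130 bits on the wire.
ENCODING_EFFICIENCY = 128 / 130


def lane_bandwidth_gb_s(gen: int) -> float:
    """Raw per-lane bandwidth in GB/s (10^9 bytes/s)."""
    # Each transfer carries one bit per lane.
    gbits = TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY
    return gbits / 8  # bits -> bytes


def link_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Raw bandwidth of a link with `lanes` lanes at the given generation."""
    return lane_bandwidth_gb_s(gen) * lanes


if __name__ == "__main__":
    for gen in (3, 4):
        print(f"Gen{gen}: {lane_bandwidth_gb_s(gen):.3f} GB/s per lane, "
              f"{link_bandwidth_gb_s(gen, 8):.2f} GB/s at x8")
```

This prints about 0.985GB/s per lane (7.88GB/s at x8) for Gen3 and about 1.969GB/s per lane (15.75GB/s at x8) for Gen4. Protocol overhead (TLP headers, flow control and so on) eats into these raw numbers, which is why the practical x8 limits quoted below are closer to 7GB/s at Gen3 and 14GB/s at Gen4.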

Rackspace, IBM, Xilinx and Eideticom: NVMe at PCIe Gen4!

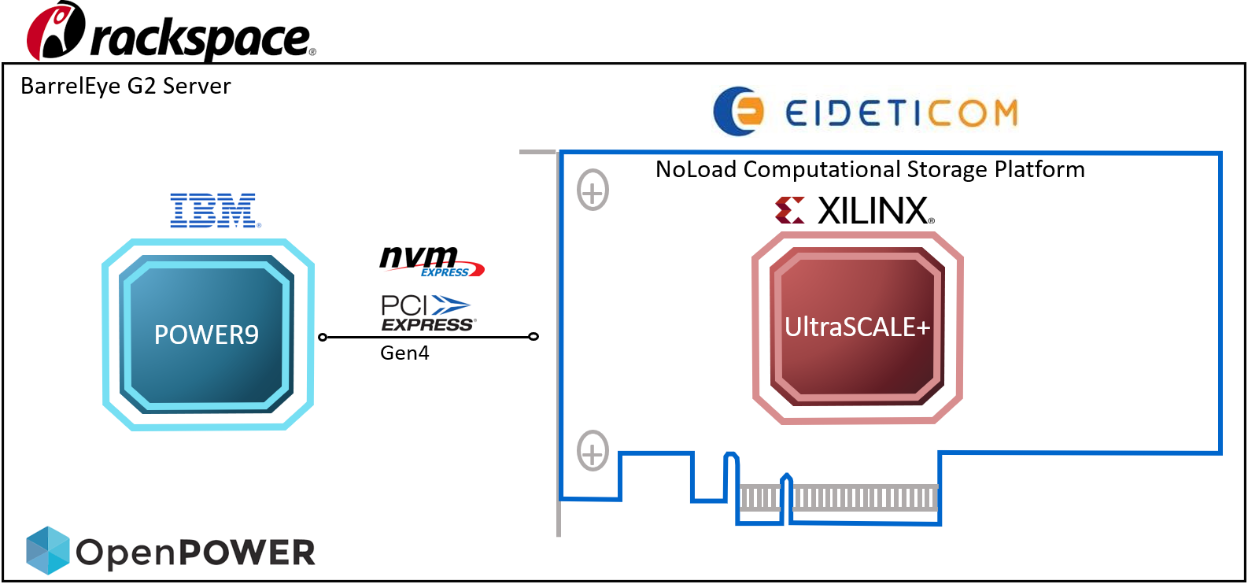

The IBM POWER9 CPU is one of the first CPUs to natively support PCIe Gen4, and we were able to leverage this via the OpenPOWER/Open Compute BarrelEye G2 server donated by our friends at Rackspace. The BarrelEye G2 is an Open Compute (OCP)/OpenPOWER server based on the IBM POWER9 processor and developed by Rackspace, Google and other member companies. Figure 1 shows some photos of the inside of the BarrelEye G2 with our Eideticom NoLoad™ NVMe card installed. The Eideticom NoLoad™ is a PCIe-attached offload/computational storage engine that communicates with the host CPU via the NVM Express protocol (see here for more information).

Figure 1: Some photos of the Eideticom NVMe NoLoad™ card inside the Rackspace BarrelEye G2 server. At top left you can see the external power supply unit. At bottom left you can see the two POWER9 CPUs hidden under the orange heat sinks. At bottom right you get a good view of the NoLoad™ plugged into one of the PCIe slots on the Zaius motherboard.

Our NoLoad™ NVMe card is based on Xilinx FPGA technology and, as I mentioned above, Xilinx have been early supporters of PCIe Gen4. We worked with them to upgrade our NVMe NoLoad™ to support PCIe Gen4.

Show me the Money!

As always, the proof is in the pudding, so we recorded a short asciinema demo showing our NoLoad™ running NVMe inside the BarrelEye G2 over PCIe Gen4. You can see the demo here; below are some things to look for.

- The BarrelEye G2 server is running standard Linux, in this case Ubuntu 16.04. So you get a look and feel identical to any Linux-based server, and all your favourite tools, libraries and packages are available via things like apt install.

- You can install and run all your favourite NVMe-related tools just as on any Linux box. In our example we install nvme-cli and use it to identify the NVMe controllers and namespaces installed on the system.

- We can install and run fio on the BarrelEye G2 in exactly the same way as on any Linux box. Same scripts, same source code, just twice the PCIe/NVMe goodness ;-). In our demo we show about 13GB/s of NVMe read bandwidth over 8 lanes of PCIe, a result that is impossible at PCIe Gen3, where about 7GB/s is the practical upper limit for an x8 link.

- All the standard PCIe tools (like lspci) work as expected. We will dig into that point in a bit more detail below.

- All the awesome work being done by the Linux kernel development team around NVMe and the block layer comes for free thanks to the kernel deployed on the BarrelEye G2.
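The fio scripts used in the demo are not shown here, so the following is only a hypothetical sketch of a comparable sequential-read run. The device path, block size, queue depth and job count are illustrative assumptions, not the demo's settings. The parser assumes fio 3.x JSON output, where each job's `read` section reports `bw_bytes` in bytes per second; older fio releases (such as the one packaged for Ubuntu 16.04) may only report `bw` in KiB/s.

```python
"""Hypothetical sketch of a sequential-read fio run on an NVMe namespace.

All parameters (device node, bs, iodepth, numjobs, runtime) are assumptions.
"""
import json
import subprocess


def build_fio_args(device: str, bs: str = "128k", iodepth: int = 32,
                   numjobs: int = 4, runtime_s: int = 30) -> list:
    """Return an argv list for a direct-I/O sequential read test."""
    return [
        "fio",
        "--name=seqread",
        f"--filename={device}",
        "--rw=read",
        f"--bs={bs}",
        "--ioengine=libaio",
        "--direct=1",            # bypass the page cache
        f"--iodepth={iodepth}",
        f"--numjobs={numjobs}",
        f"--runtime={runtime_s}",
        "--time_based",
        "--group_reporting",     # aggregate all jobs into one result
        "--output-format=json",
    ]


def read_bandwidth_gb_s(fio_json: str) -> float:
    """Sum read bandwidth (bw_bytes, bytes/s) over the jobs in fio's JSON output."""
    data = json.loads(fio_json)
    return sum(job["read"]["bw_bytes"] for job in data["jobs"]) / 1e9


def run_fio(args: list) -> float:
    """Run fio (needs fio installed and read access to the device), return GB/s."""
    out = subprocess.run(args, capture_output=True, text=True, check=True).stdout
    return read_bandwidth_gb_s(out)


if __name__ == "__main__":
    # Only print the command: reading a raw NVMe device usually needs root.
    print(" ".join(build_fio_args("/dev/nvme0n1")))  # hypothetical device node
```

Keeping command construction separate from execution makes it easy to sweep block sizes or queue depths from one script while reusing the same fio invocation on any Linux box, POWER9 or otherwise.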

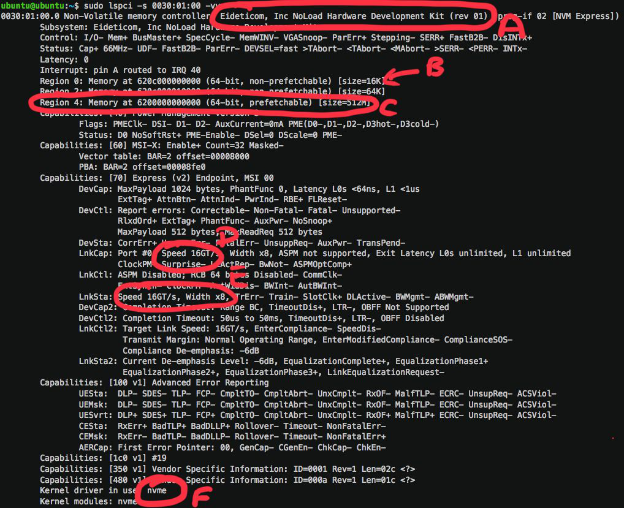

I also wanted to capture a screenshot from the demo and highlight some things in it (see Figure 2).

Figure 2: lspci -vvv for the Eideticom NoLoad™ inside the BarrelEye G2 server. Some of the more interesting pieces of the output are highlighted and labelled.

Let’s go through the interesting parts of the lspci -vvv output in Figure 2.

- A - Eideticom has registered its vendor ID and device IDs with the PCI ID database. This means you get a human-readable description of the NoLoad™ in your system. Note that this holds for any system, not just POWER or even Linux for that matter.

- B - The NoLoad™ has three PCIe BARs. BAR0 is 16KB and is the standard NVMe BAR that any legitimate NVMe device must have. The NVMe driver maps this BAR and uses it to control the NVMe device.

- C - The NoLoad™'s third BAR is a Controller Memory Buffer (CMB), which is unusual in that it can be used for both NVMe queues and NVMe data. To our knowledge, no other device yet supports both queues and data in its CMB. Also note that our CMB is pretty big (512MB). We discussed what we do with this CMB in more detail in a previous blog post.

- D - Thanks to our friends at Xilinx, we can advertise PCIe Gen4 capability (i.e. 16GT/s). Note that in systems that do not support Gen4, the link will simply come up at PCIe Gen3.

- E - Thanks to our friends at IBM and Rackspace the CPU and server system support PCIe Gen4 so the link is up and running at Gen4 (16GT/s)! This particular NoLoad™ is x8 so our maximum throughput will be about 14GB/s.

- F - Since our device is an NVMe device, it is bound to the standard Linux kernel NVMe driver. This means we get all the goodness and performance of a stable inbox driver that ships with all major OSes and all Linux distributions. No need to compile and insmod/modprobe a crappy proprietary driver, thank you very much!
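Items B, D and E above can also be checked without lspci by reading the attributes Linux exposes for each PCI device under /sys/bus/pci/devices/. The following is a minimal sketch assuming a reasonably recent kernel that provides `current_link_speed`, `current_link_width`, `max_link_speed` and `max_link_width`; the PCI address in the usage line is a placeholder, so look up your device's address with lspci first. A device advertising Gen4 in a Gen3-only system would show `max_gen` 4 but `current_gen` 3.

```python
"""Sketch: read negotiated PCIe link state and BAR sizes from Linux sysfs.

Assumes a Linux host; the PCI address used below is a hypothetical placeholder.
"""
import os
import re

# Per-lane transfer rate (GT/s) -> PCIe generation.
GEN_BY_GT_S = {2.5: 1, 5.0: 2, 8.0: 3, 16.0: 4, 32.0: 5}


def _read(dev_dir: str, attr: str) -> str:
    with open(os.path.join(dev_dir, attr)) as f:
        return f.read().strip()


def link_generation(speed: str):
    """Map strings like '16 GT/s' or '16.0 GT/s PCIe' to a generation (or None)."""
    m = re.match(r"([\d.]+)\s*GT/s", speed)
    return GEN_BY_GT_S.get(float(m.group(1))) if m else None


def link_status(dev_dir: str) -> dict:
    """Current vs. maximum link generation and width as reported by the kernel."""
    return {
        "current_gen": link_generation(_read(dev_dir, "current_link_speed")),
        "current_width": int(_read(dev_dir, "current_link_width")),
        "max_gen": link_generation(_read(dev_dir, "max_link_speed")),
        "max_width": int(_read(dev_dir, "max_link_width")),
    }


def bar_sizes(dev_dir: str) -> dict:
    """Sizes in bytes of populated BARs, from the sysfs 'resource' file.

    Lines 0-5 of 'resource' correspond to BAR0-BAR5, each holding
    'start end flags' in hex. Unused entries (including the upper half of a
    64-bit BAR) are all zeros and are skipped.
    """
    sizes = {}
    with open(os.path.join(dev_dir, "resource")) as f:
        for bar, line in enumerate(f.readlines()[:6]):
            start, end, _flags = (int(x, 16) for x in line.split())
            if end:
                sizes[f"BAR{bar}"] = end - start + 1
    return sizes


if __name__ == "__main__":
    dev = "/sys/bus/pci/devices/0000:01:00.0"  # hypothetical PCI address
    if os.path.isdir(dev):
        print(link_status(dev), bar_sizes(dev))
```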
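Item C above comes down to a few bits in the NVMe controller registers: per the NVMe specification, the CMBSZ register (offset 0x3C in BAR0) advertises the CMB's size and what it may hold, while CMBLOC (offset 0x38) says which BAR contains it. The following is a minimal decoder sketch for CMBSZ only. The example value is hypothetical, chosen to describe a 512MiB CMB supporting both queues and data; it was not read from a NoLoad™.

```python
"""Sketch: decode an NVMe Controller Memory Buffer Size (CMBSZ) register value."""

# Size Units (SZU, bits 11:8): granularity of the SZ field, in bytes.
# Values 7h-Fh are reserved.
SZU_BYTES = {0: 4 << 10, 1: 64 << 10, 2: 1 << 20, 3: 16 << 20,
             4: 256 << 20, 5: 4 << 30, 6: 64 << 30}

# Single-bit capability flags in bits 4:0.
FLAGS = {
    "SQS": 0,    # submission queues may be placed in the CMB
    "CQS": 1,    # completion queues may be placed in the CMB
    "LISTS": 2,  # PRP/SGL lists may be placed in the CMB
    "RDS": 3,    # data for controller-to-host (read) transfers
    "WDS": 4,    # data for host-to-controller (write) transfers
}


def decode_cmbsz(value: int) -> dict:
    szu = (value >> 8) & 0xF       # bits 11:8
    sz = (value >> 12) & 0xFFFFF   # bits 31:12
    out = {name: bool((value >> bit) & 1) for name, bit in FLAGS.items()}
    out["size_bytes"] = sz * SZU_BYTES[szu]  # raises KeyError on reserved SZU
    return out


if __name__ == "__main__":
    info = decode_cmbsz(0x0020021F)  # hypothetical register value
    print(info["size_bytes"] >> 20, "MiB", info)
```

With SZU=2 (1MiB units) and SZ=512, the hypothetical value decodes to a 512MiB CMB with all five capability bits set, i.e. a CMB usable for both queues and data.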

Where Next?

The PCIe Gen4 ecosystem is just beginning. Eideticom plans to be at the forefront of this transition and, as such, is working with other CPU, PCIe switch and PCIe endpoint vendors who are deploying PCIe Gen4. Expect to see more announcements concerning the deployment of Eideticom’s NoLoad™ in PCIe Gen4 systems soon.

If you are keen to repeat this for yourself you can come talk to us at Eideticom to get one of our NoLoad™ Hardware Eval Kits (HEKs). Barreleye G2 server samples are available from Rackspace and you can contact Adi Gangidi (